BEAUFORT — The swift pace of technological development has given researchers tools that can collect more data in less time and with fewer resources than a decade ago.

Lightweight tags with long-lasting batteries can track animals as small as insects and measure the conditions around them. DNA sequencing technologies have decoded the genomes of thousands of organisms from the loblolly pine to the black bear. Drones can quickly photograph landscapes and animals in locations that may be inaccessible or unsafe for people.

Strategies for processing and analyzing this wealth of data must also keep up so scientists can efficiently interpret and share their findings. Automated methods using artificial intelligence are quickly progressing and becoming more necessary to extract useful information from collected data and visual images.

Researchers at the Duke University Marine Lab in Beaufort, along with collaborators from the University of North Carolina at Chapel Hill, the University of California Santa Cruz and Stanford University, have developed a new automated method to identify and measure whales in drone images.

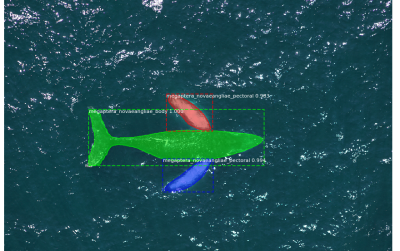

Published in Methods in Ecology and Evolution this summer, the method uses neural networks, a type of artificial intelligence and machine learning, to distinguish whale species and measure their length much more quickly than a person can.

Information from these photos can teach researchers about whale health, behavior and population sizes and can ultimately help guide conservation and management strategies.

Patrick Gray, lead author and developer of the whale recognition software, said the project originated while his lab mates were amassing an overwhelming amount of photos from drone flights in Antarctica and off the California coast. Gray is a doctoral student in the Marine Robotics and Remote Sensing Lab led by professor David Johnston at the Duke Marine Lab.

Fellow doctoral student and project co-author Kevin “KC” Bierlich manages the huge imagery dataset for his dissertation research. As a drone pilot, Bierlich launches drones from a boat and captures photos of whales at opportune moments when they come to the ocean surface. He snaps hundreds of photos during each 10- to 15-minute drone flight, aiming for photos where the whale’s body is fully stretched out, rather than curved.

Back at the lab, Bierlich looks at each photo on a computer, selects the best images of each whale and then draws a digital line over the whale to measure the length in pixels. He converts photo pixels to an actual length by using the drone’s altitude and camera specifications to figure out the scale of each photo.
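The pixel-to-length conversion described above can be illustrated with a minimal sketch. It assumes the camera points straight down and uses the standard ground sample distance relation. The function names and all of the numbers (altitude, focal length, sensor width, image width) are hypothetical and are not taken from the study.

```python
def ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Metres of sea surface covered by one pixel, assuming a camera pointing straight down."""
    pixel_size_mm = sensor_width_mm / image_width_px  # physical size of one sensor pixel
    return altitude_m * pixel_size_mm / focal_length_mm


def pixels_to_metres(length_px, altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Convert a length drawn on the photo (in pixels) to a length in metres."""
    scale = ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px)
    return length_px * scale


# Hypothetical flight: 40 m altitude, 25 mm lens, 20 mm wide sensor, 4000 px wide image.
whale_length_m = pixels_to_metres(1500, 40.0, 25.0, 20.0, 4000)
print(f"Estimated length: {whale_length_m:.1f} m")
```

With these made-up values each pixel covers about 8 millimetres of water, so a line 1,500 pixels long corresponds to roughly 12 metres. Any error in the recorded altitude carries straight through to the length estimate.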

While Bierlich enjoys looking through the photos, managing and measuring thousands of images takes considerable time and effort that could instead be spent on interpreting and reporting their findings.

“That’s an opportunity cost,” he said.

Automating the whale identification and measurement process was an achievable goal due to continuing breakthroughs in machine learning. Machine learning refers to training computer models to perform a task, such as recommending a new song, by finding patterns in large amounts of example data, such as the songs you and others already listen to.

“If you were to do this four years ago, it would be a two-year research undertaking with a team of computer scientists,” said Gray. Recent advancements in deep learning, a more powerful type of machine learning, allowed him to create the whale measurement program relatively quickly. The project started last summer, and it took Gray about two weeks of nonstop coding to build the neural network.

Deep learning relies on neural networks, computer systems that are especially useful for visual recognition since they can be trained with just labeled images. A common example of deep learning is facial recognition in applications like Facebook. The app learns to recognize someone’s features as people tag, or label, that person in photos. When you upload a new photo on Facebook, the app can then identify people in the photo and suggest you tag them (note: users can opt out of facial recognition).

To train the neural network, Gray fed it drone images that the researchers had already marked with the whale’s location and had labeled with the whale species name – either blue, minke or humpback.

Though they had a few hundred images labeled, ideally they needed many more to properly train the system. Gray therefore pretrained the neural network with a publicly available dataset from Microsoft of more than 300,000 images that were labeled with objects they contained, like toothbrushes and clocks. Although they didn’t include whales, these images could still train the network to detect objects in an image.

To measure the whale’s length after identifying it, the program extracts the pixels that make up the whale’s body and uses an algorithm to find the longest line through the whale.
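One simple way to picture the "longest line" step is to take every pixel marked as whale and find the two that are farthest apart. The sketch below does this by brute force on a tiny made-up mask. It is an illustration only, not necessarily the algorithm used in the published software, and the name `longest_line_px` is hypothetical.

```python
from itertools import combinations
from math import dist


def longest_line_px(mask):
    """Largest distance, in pixels, between any two pixels marked as whale.

    `mask` is a list of rows; truthy entries are whale pixels. Distances are
    measured between pixel centres. This brute-force search checks every pair,
    so it only suits small masks.
    """
    points = [(x, y) for y, row in enumerate(mask) for x, value in enumerate(row) if value]
    if len(points) < 2:
        return 0.0
    return max(dist(p, q) for p, q in combinations(points, 2))


# Made-up mask: a 2-pixel-tall, 16-pixel-long "whale" in a 10 x 20 image.
mask = [[0] * 20 for _ in range(10)]
for y in (4, 5):
    for x in range(2, 18):
        mask[y][x] = 1

print(f"Longest line: {longest_line_px(mask):.2f} px")
```

A curved whale would be underestimated by a straight line like this, which matches the preference for photos in which the body is fully stretched out.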

The researchers then ran the program with a new set of whale photos to compare results from the automated program with results they found by hand. The system was exceptionally accurate in distinguishing between the three whale species, correctly predicting 98% of the images. The system also gives a confidence score with each species prediction, which can alert researchers if the system isn’t confident in its prediction and might need assistance from human eyes.
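As a rough sketch of how a confidence score could be used to route uncertain predictions to a person, the example below flags any prediction whose score falls under a cutoff. The file names, scores, the 0.9 threshold and the function name `needs_review` are all hypothetical.

```python
def needs_review(predictions, threshold=0.9):
    """Return the predictions whose confidence is below the threshold."""
    return [p for p in predictions if p["confidence"] < threshold]


# Made-up predictions for three drone images.
predictions = [
    {"image": "flight01_0042.jpg", "species": "humpback", "confidence": 0.99},
    {"image": "flight01_0107.jpg", "species": "minke", "confidence": 0.62},
    {"image": "flight02_0015.jpg", "species": "blue", "confidence": 0.95},
]

for p in needs_review(predictions):
    print(f"Check by eye: {p['image']} (predicted {p['species']}, {p['confidence']:.0%})")
```

Where to set the cutoff is a judgment call: a higher value sends more images to a human, while a lower one trusts the network more often.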

The computer’s length measurements were also quite accurate, within 5% of the manual measurements. In photos where the automated measurements differed considerably from measurements done by hand, the whale was obscured by something in the photo, such as a boat or water being sprayed from the whale. If the neural network were trained with images that included other objects like boats, waves or ice chunks, measurement accuracy would likely improve.
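The comparison between automated and manual measurements comes down to a percent difference. A minimal sketch, using made-up lengths rather than values from the study, and a hypothetical function name:

```python
def percent_difference(automated_m, manual_m):
    """Absolute difference between the two measurements, as a percentage of the manual one."""
    return abs(automated_m - manual_m) / manual_m * 100


# Made-up example: the program measures 12.4 m, the researcher measured 12.0 m by hand.
difference = percent_difference(12.4, 12.0)
print(f"Difference: {difference:.1f}%")
```

Here the two measurements differ by a little over 3%, which would fall inside the 5% range reported above.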

While automation is accurate and saves time, Bierlich noted that manual processing also has benefits. By looking at each image, he can notice specific details that spur new research questions, or can spot things that the computer may not have been trained to recognize.

This type of automated program is the first of its kind, not only identifying the whales in images but also measuring their lengths. The program can be further modified for other research applications, like detecting other organisms, measuring additional features of an animal, and detecting an animal’s movement patterns in videos.

“The dream would be to put this system on the drone,” Gray said, which could reduce the need to sort through images back in the lab. Having direct feedback from the drone about image quality and an animal’s measurements could help direct the scientists’ research strategies while they’re still out on the water.

The code for the neural network is publicly available for others to use and modify, following the open-source culture that’s allowed the field of machine learning to quickly develop.

“We want people to run with it, we want people to expand upon it,” Bierlich explained.

Other co-authors on this study include Sydney Mantell, a Morehead-Cain Scholar at UNC; Ari Friedlaender of UC Santa Cruz’s Institute of Marine Sciences; Jeremy Goldbogen of Stanford University’s Hopkins Marine Station; and Johnston of the Duke Marine Lab. Marine Robotics and Remote Sensing Lab engineer Julian Dale and research assistant Clara Bird also assisted with the project.